Generative AI Landscape Series #1: Foundation Models

High-level overview of the entire stack, and an initial dive into the foundation model layer

Generative AI is all the rage these days, but it’s been hard keeping track of all the blazing-fast developments in this space (in fact, if I had written this article even two months ago, the content below would’ve probably looked quite different). In trying to understand the landscape, I’ve found that articles tend to either offer very high-level overviews without diving into specific players, or focus narrowly on one facet of one player, with very few pieces striking a balance in the middle. My intent with this series is to offer my understanding of the important players and dynamics in each part of the stack so that the overall picture is both clear and detailed.

Each piece will dive into one section of the Generative AI stack. Before that, however, let’s zoom out to see what the current stack even looks like.

What’s the Stack?

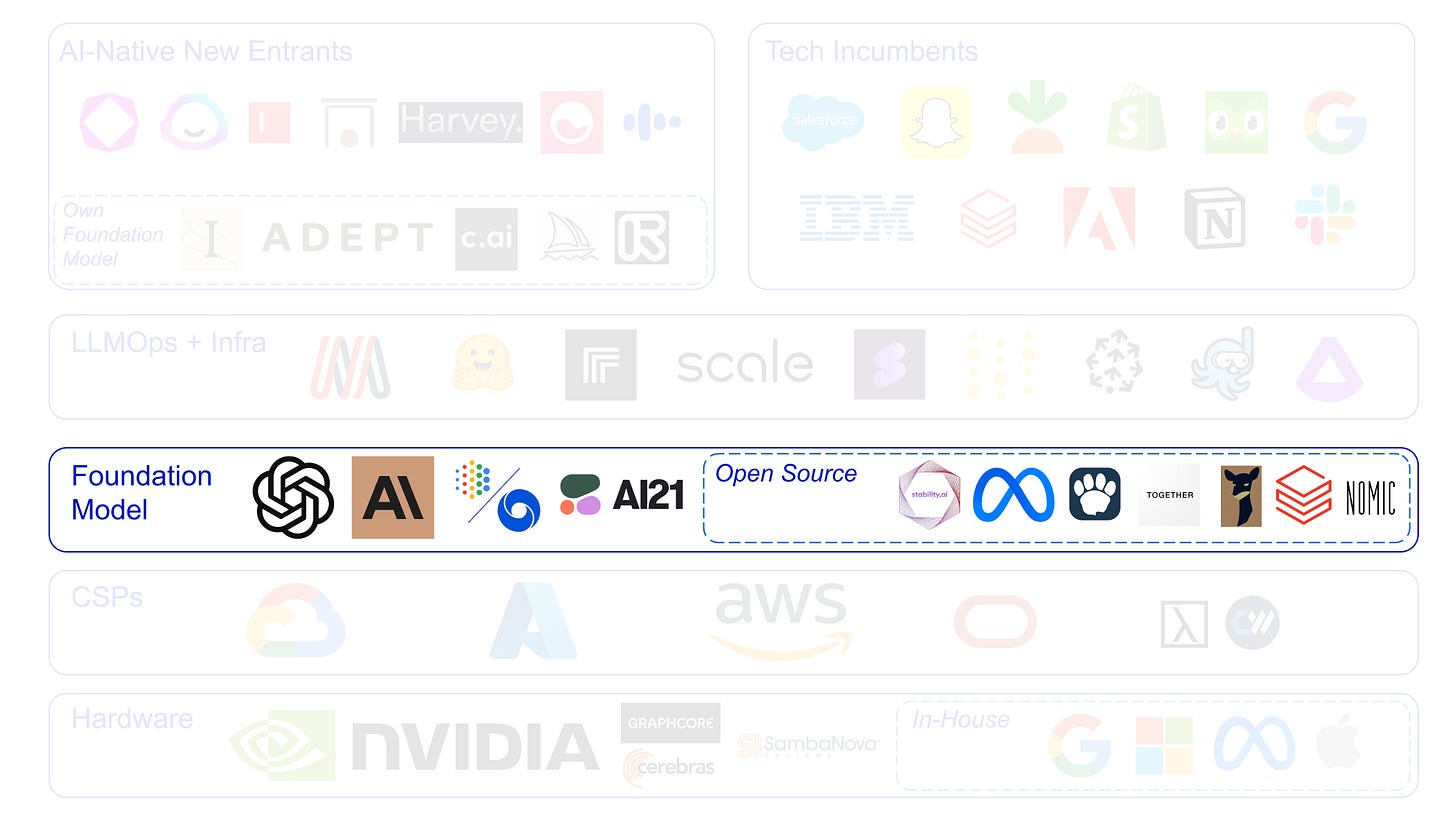

There are a million variations of this map, but here’s my spin on it:

Below are brief overviews of the primary categories, but keep in mind these are gross simplifications and in reality, dynamics are much more complex and fluid both within and between categories.

Hardware: The atoms powering the world of bits. These players make your actual computing hardware, with Nvidia enjoying a highly dominant position in the GPU market (~80% according to some estimates, possibly even higher in the high-end, AI-training segment). Other incumbents like Intel and AMD are trying to play catch-up, while start-ups like Cerebras or SambaNova attempt to compete mostly through novel chip architectures, although none of them has proven reliably superior to GPUs.

In-house chip designs from tech giants, like Google's TPUs or Microsoft's reported Athena chip, probably pose the most significant “competition” to Nvidia. However, the development of in-house chips is likely confined to the five or six companies with the requisite capital to invest in proprietary hardware.

CSPs (Cloud Service Providers): The Big-3 CSPs (AWS, Azure, GCP) still form the backbone of AI computing. Even the largest AI labs like OpenAI and Anthropic are at the end of the day reliant on their Cloud partner of choice, so much so that cloud credits have become a form of funding. They’ll generally continue to benefit from the explosion of AI workloads, regardless of who wins in the application layer. One interesting dynamic to see play out will be whether the CSPs’ own apps and infra win out due to vertical integration.

Oracle is a sneaky 4th player signing their own large workloads in the background. Otherwise there are smaller players like Lambda Labs and CoreWeave that specialize exclusively as GPU-clouds.

Foundation Model Builders: The groups actually training Generative AI models. In the closed-source world, the biggest names include OpenAI, Anthropic, and Google DeepMind. These companies created some of the most advanced models currently available, including GPT-4, Claude, and PaLM 2. Other groups opt to go primarily open-source instead, including Meta, Stability AI, EleutherAI, and a number of academic institutions. A big debate (discussed in a later section) raging in the AI community right now is “who will win between open vs. closed source.”

LLMOps + Infra: The “picks and shovels” of GenAI. This is a very broad category that can include anything like:

Vector databases, e.g. Pinecone

Labeling services, e.g. Scale

MLOps software, e.g. Weights & Biases

Training platforms, e.g. MosaicML

Similar to CSPs, these players will generally benefit from activity in AI regardless of who wins more downstream.

AI-Native New Entrants: The hundreds if not thousands of AI startups that have seemingly sprung up since the release of ChatGPT back in Nov’22. These start-ups generally live in the “application layer” of the stack, i.e. the products that are the most consumer-facing. I’ve also included here the companies that train their own foundation models but whose primary product is more consumer-facing and less API-driven[1]. Examples include Character.ai, Inflection AI, Midjourney, and Adept AI (many founders at these companies are formerly influential AI researchers).

Tech Incumbents: The old guard of tech companies that are now in an “add AI features or die” mode. Everyone has AI features. Everyone. The big dynamic here playing out is whether startups in the application layer have sufficient moats, or if all their products will just become features in larger incumbent products.

Model Builders and Their Strategies

In this piece, we’ll focus on just the “Foundation Model Builders” and what some of their strategies seem to look like. One caveat: although foundation models include base models of any modality (e.g. image → Midjourney, DALL-E; text → GPT-4, Claude), this article leans heavily into text models, or LLMs, which so far seem to have broader transformative implications[2].

OpenAI: The “leader of the pack”, if not technically then at least in publicity. ChatGPT arguably sparked the entire GenAI craze, and GPT-4 is still the best publicly released model to date[3]. Even in academic research, ChatGPT (which mostly refers to the older gpt-3.5-turbo model unless explicitly stated as GPT-4) is still the gold standard against which teams benchmark new model releases. This is reflected in their eye-watering $29B valuation, largely driven by $10B+ of Microsoft funding (although half of it is in the form of Azure cloud credits).

It’s sometimes shocking to think that it was only four years ago that OpenAI transitioned from a non-profit research lab to opening a for-profit “subsidiary” (with Sam Altman taking the reins in the process). When asked in an interview in 2019 how OpenAI makes money, Sam replied:

“You know the honest answer is we have no idea. We have never made any revenue, we have no current plans to make revenue, we have no idea how we may one day generate revenue. We have made a soft promise to investors that once we’ve built this sort of generally intelligent system, basically we’ll ask it to figure out a way to generate an investment return for you.”

I have no idea if OpenAI ever ended up consulting their GPT models on business strategy, but fast-forward four years and their revenue mostly comes from two sources (with a third, speculative one I’ll talk about):

API: The most direct way OpenAI gives access to its models, and what initially made me believe OpenAI was more of an infrastructure company than a consumer-app company (that narrative has shifted a bit with ChatGPT). Individual developers and large enterprises alike consume tokens and are billed monthly on their variable usage. This is OpenAI’s most likely enterprise motion right now, where truly enterprise use cases will get reserved instances and SLAs, very similar to the cloud company business model. Enterprise motions tend to need a lot of hand-holding and customized deployments, which is why we’ll probably see more and more partnership motions like Bain x OpenAI that’ll help amplify OpenAI’s GTM (akin to how SAP and Salesforce implementation has an entire systems integrator ecosystem). Pricing on model APIs will likely see premiums on state-of-the-art models (e.g. GPT-4), while older model (e.g. GPT-3.5) pricing will see a race to the bottom to stay competitive with other proprietary providers and also open source (i.e. “hitting our API is cheaper than buying your own GPUs for inference”).

Subscription: This is the consumer-facing, $20/month ChatGPT Plus subscription that gives users access to GPT-4 and other beta features like Code Interpreter + Plugins. With the explosive growth and popularity of ChatGPT that led to over 100 million users, I’m sure OpenAI realized slapping a subscription model on with premium features was a no-brainer. Even at a 1% free-to-plus conversion rate (which is typical), 100M users → 1M subscribers, and at $20/month ($240/year) that already equals a cool $240M this year in subscription revenue[4]. OpenAI’s “lofty” goal at the end of Dec’22 was only $200M in 2023. One interesting direction I can see OpenAI taking is creating “enterprise-ready” instances of ChatGPT that are sectioned off from the public and can work with internal company data. The lack of such an offering is one barrier that stops most people from seriously using ChatGPT at work, even though something like Code Interpreter is the holy grail tool for a business analyst like me. Like all enterprise SaaS though, it will take time to build, sell, and become security-compliant.

Plugins App Store: Plugins are a beta feature on ChatGPT that allows developers to create tools (essentially other API endpoints) that ChatGPT can use to augment itself. Examples include calling Wolfram Alpha for math problems, Zapier for cross-software automation, etc. When this feature was initially released, people were calling it the “app store” moment for ChatGPT, with OpenAI potentially being able to rake in plugin fees and maybe even monetize through subtle ads in its answers. However, the current state of plugins is still very unstable and unreliable, which Sam even admitted in a recent interview, so it remains to be seen whether the plugin business model can take off again with future model advances.
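To make the ChatGPT Plus back-of-the-envelope math from the subscription paragraph above easy to play with, here is a minimal Python sketch. The user count, conversion rate, and price come from the article; the function name, its parameters, and especially the churn model (an annual churn rate spread evenly across twelve months) are my own illustrative assumptions, not anything OpenAI has published. The 12% churn figure is simply a point inside the 10-14% range mentioned in the footnote on this estimate.

```python
def estimate_subscription_revenue(
    total_users: int,
    conversion_rate: float,
    monthly_price: float,
    annual_churn: float = 0.0,
) -> float:
    """Estimate 12 months of subscription revenue in dollars.

    Assumption (hypothetical, for illustration only): churn is spread
    evenly over the year, so the paying base shrinks by the same
    fraction every month and ends the year at (1 - annual_churn) of
    its starting size. No new subscribers are added.
    """
    if not 0.0 <= annual_churn < 1.0:
        raise ValueError("annual_churn must be in [0, 1)")

    subscribers = total_users * conversion_rate
    # Monthly retention that compounds to the annual retention rate.
    monthly_retention = (1.0 - annual_churn) ** (1.0 / 12.0)

    revenue = 0.0
    for _ in range(12):
        revenue += subscribers * monthly_price
        subscribers *= monthly_retention
    return revenue


if __name__ == "__main__":
    # Figures from the article: 100M users, 1% conversion, $20/month.
    no_churn = estimate_subscription_revenue(100_000_000, 0.01, 20.0)
    print(f"No churn:   ${no_churn / 1e6:,.0f}M")

    # Same inputs with an assumed 12% annual churn.
    with_churn = estimate_subscription_revenue(
        100_000_000, 0.01, 20.0, annual_churn=0.12
    )
    print(f"12% churn:  ${with_churn / 1e6:,.0f}M")
```

With churn set to zero this reproduces the $240M figure; any positive churn pulls the estimate below that, which is why the headline number is best read as an upper bound for a fixed user base.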

The diagram below illustrates what OpenAI’s product ecosystem currently seems to look like:

Overall OpenAI has pretty good product-market fit, and I wouldn’t completely bet against them making $1B by 2024, but a few risks remain: 1) whether AI features built on GPT truly add value to the bottom line, 2) whether OpenAI can meaningfully stay ahead of competitors technically, and 3) whether fine-tuning a powerful open-source base model turns out to be better ROI for an enterprise in the long run (which affords more control as well).

Anthropic: Anthropic was founded in 2021 by a group of former OpenAI researchers who didn’t agree with the direction of the company at the time. Given that background, it’s no wonder that Anthropic’s talent density is immense, with an insane $1.45B in funding to boot. It likes to publicize its focus on safety and interpretability research, although ever since OpenAI’s ChatGPT release, there’s been a heavy push toward increased commercialization and GTM efforts on signing partnerships for its main product Claude. Likely there was investor pressure to catch up to OpenAI, not in terms of technical capacity, but in terms of making money.

Model-wise, Anthropic is probably on par with or at least right behind OpenAI, with at least one type of leaderboard showing Claude between GPT-3.5 and GPT-4. Strategy-wise, leaked materials from their Series C funding deck seem to suggest that the “ultimate AI Assistant” is the long-term vision of the company’s research efforts, mostly through continuous scaling of larger and more powerful models that increase human-like capabilities. Commercially, this will likely translate into large licensing deals with a slew of enterprises, although I’d be shocked if Anthropic didn’t one day also release their own ChatGPT-style product to compete in the consumer landscape.

Unlike OpenAI, Anthropic has yet to seriously open up its product to the public, with the closest being the Claude Slack Bot integration or Quora’s Poe, with the Claude API very much behind a waitlist. Most of its current GTM seems focused on signing enterprise partnerships that integrate Claude as the foundation model behind different AI features, similar to OpenAI’s GPT-4 enterprise motion.

An interesting detail to keep an eye on will be Google’s considerable investment into Anthropic, and whether that came with strings attached to Anthropic’s partnership options, or whether it was very simply a cloud spend play. Given Google’s own competitive offerings to Anthropic (more below), I can’t imagine there being major restrictions, but Anthropic has yet to announce major GTM partnerships with any CSP.

DeepMind / Google: In the past, DeepMind and Google Brain were more or less purely research-oriented and drove a lot of the original innovation behind current model architectures. Recent developments have put Google in code-red due to potentially increased pressure from LLMs on their core search business model, and a generally perceived “lagging” in technical capability by the wider public. Now Google DeepMind is a merged entity consolidating its research efforts, which I’m guessing will increasingly have an applied business focus with fewer open publications in the future. Their entire job will be to create competitive models to power Google’s budding AI products (Bard, etc.).

Google’s strategy will be to embed as much AI as possible into its existing products and services to avoid “disruption” in any segment. This year’s Google I/O is enough evidence of this, where PaLM 2 (Google’s most advanced LLM) is already powering more than 25 products and features. We’ll soon see AI in Google Workspace, Search, Photos, and basically every other major Google product to stave off any and all competition. It’s one of the lucky companies in the world with enough capital to subsidize these features while cost efficiencies figure themselves out on the inference end. Competing AI startups might have VC funding, but my guess is their pressure to monetize is much higher.

Zooming out, Google wants to prove that AI will be much more of a “sustaining” innovation (reinforces incumbent businesses) than a “disruptive” innovation (upends incumbent businesses)[5]. Essentially, it wants to turn every AI product into a feature, taking half of YC’s incoming class with it. If Google had its way, the AI landscape might look a little like this:

We’ll touch more on Google’s specific products in each part of the stack in future posts.

Cohere/AI21: Cohere and AI21 are both “smaller” LLM players that offer alternative foundation models to OpenAI/Anthropic/Google. I’ll focus on just Cohere since it’s the better-known (and better-funded) of the two, and they’re pretty similar in spirit[6].

Cohere also offers easy-to-embed APIs for tasks ranging from classification to generation. It’s outwardly more explicitly focused on commercial use cases compared to the research outfit angle that OpenAI and Anthropic have. To put it another way, OpenAI/Anthropic read like “AI research labs that just happened to commercialize” while Cohere reads more like “we’ve been about the enterprise from day 1”. To that end, Cohere emphasizes production-ready deployment. From their recent Series C announcement:

“Cohere’s enterprise AI suite is cloud-agnostic, offering the highest levels of flexibility and data privacy. The platform is built to be available on every cloud provider, deployed inside a customer’s existing cloud environment, virtual private cloud (VPC), or even on-site, to meet companies where their data is. This empowers businesses to transform existing products and build the next era-defining generation of innovative solutions all while keeping their data secure.”

Existing flexibility, security, and privacy are how Cohere will pitch to businesses that are eager to build out AI capabilities while its competitors are trying to catch up in becoming enterprise-ready. Anecdotally, Cohere’s best “Command-xlarge” model might not be as strong as GPT-4 or Claude, so battling on fine-tuning flexibility and product maturity will be important. Cohere is also starting to build alliances with players in other parts of the stack that are not in the Microsoft/OpenAI or Google coalition. It’s no surprise then that it inked deals to deliver services through AWS and more recently Oracle. In effect, these partnerships will amplify Cohere’s GTM and provide a strong alternative foundation model partner to whoever needs it.

Tech incumbents building Open-Source: This category represents companies (mostly Meta) that are heavily contributing to open-source rather than building proprietary models. Examples include Meta releasing LLaMA[7], and Databricks with Dolly. The best thesis here is the strategy of “commoditizing your complements”, which in a nutshell means you want every part of the tech stack that isn’t your core product to be as competitive (and therefore cheap) as possible, thereby accruing more demand and value in your product instead. Microsoft did this well in the PC wars by not selling exclusive licenses, so while PCs got increasingly cheaper with dozens of vendors competing on the same basic design, Microsoft sold Windows to all of them and became the eventual household name that it is.

Clicking into Databricks, its core product is essentially a database + analytics tools. In the world of GenAI, its core motivation is obviously to have as many people develop on their platform for LLM workflows, which is really only viable if companies are developing on top of open-source models. In the OpenAI monopoly world, its API and tools are the only things app companies would ever need to worry about, thereby abstracting away the Databricks layer.

In the Dolly announcement post, Databricks isn’t even subtle about this:

“We are open-sourcing the entirety of Dolly 2.0, including the training code, the dataset, and the model weights, all suitable for commercial use. This means that any organization can create, own, and customize powerful LLMs that can talk to people, without paying for API access or sharing data with third parties.”

That last sentence is a clear jab at the OpenAIs/Anthropics of the world, and the release of Dolly is supposed to convey the feeling of “if we can create a viable open-source LLM, you can too!”

This framework can be applied to Nvidia’s NeMo toolkit for building on top of foundation models as well. Nvidia explicitly touts “Flexible, Open-Source, Rapidly Expanding Ecosystem” to encourage as many developers to build on top of Nvidia hardware and infra as possible. If any and all layers upstream can be commoditized, thereby increasing demand for computing, then Nvidia’s data-center division will have an even bigger field day than the one it’s already currently experiencing.

Open Source vs Closed Source?

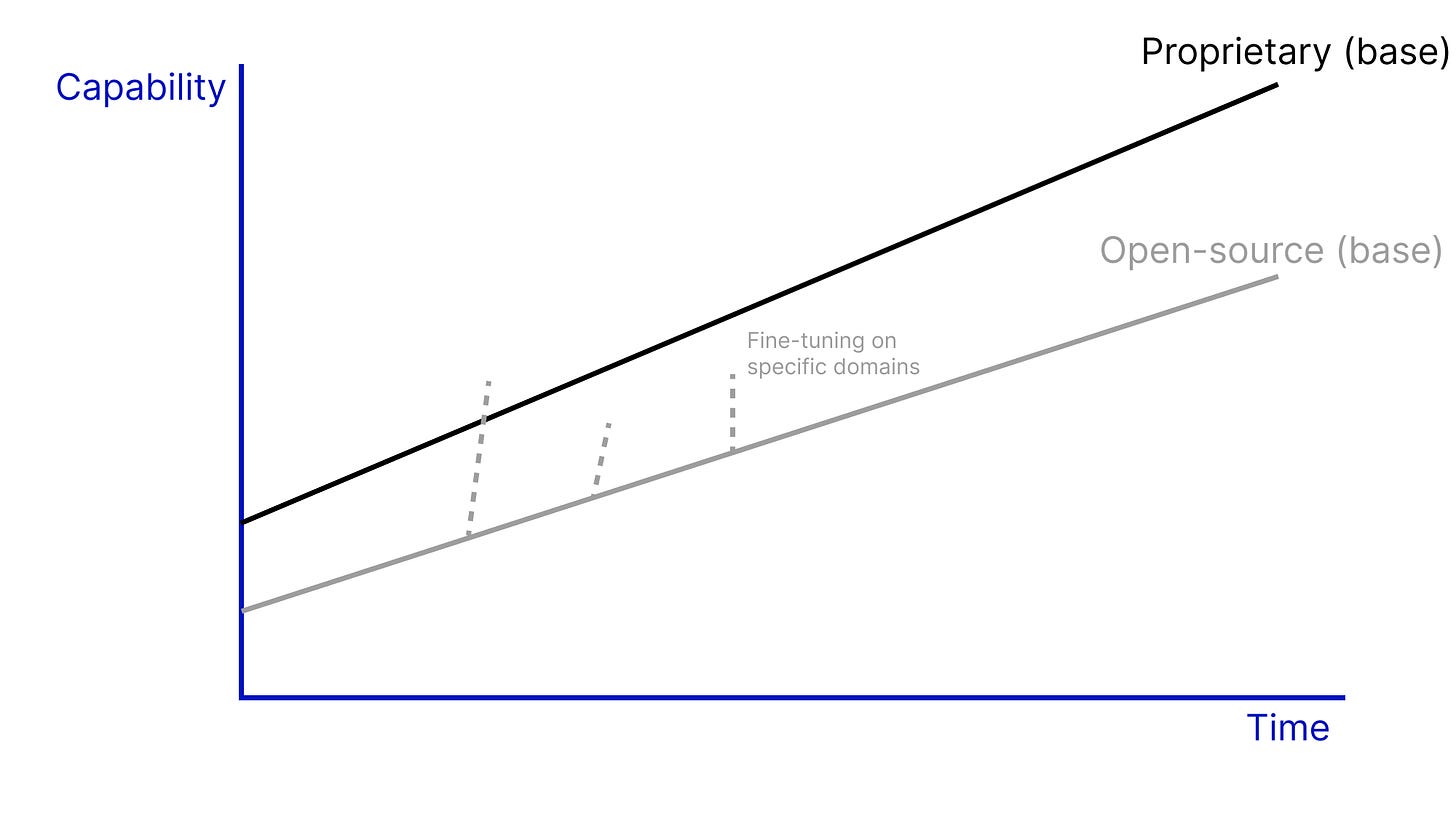

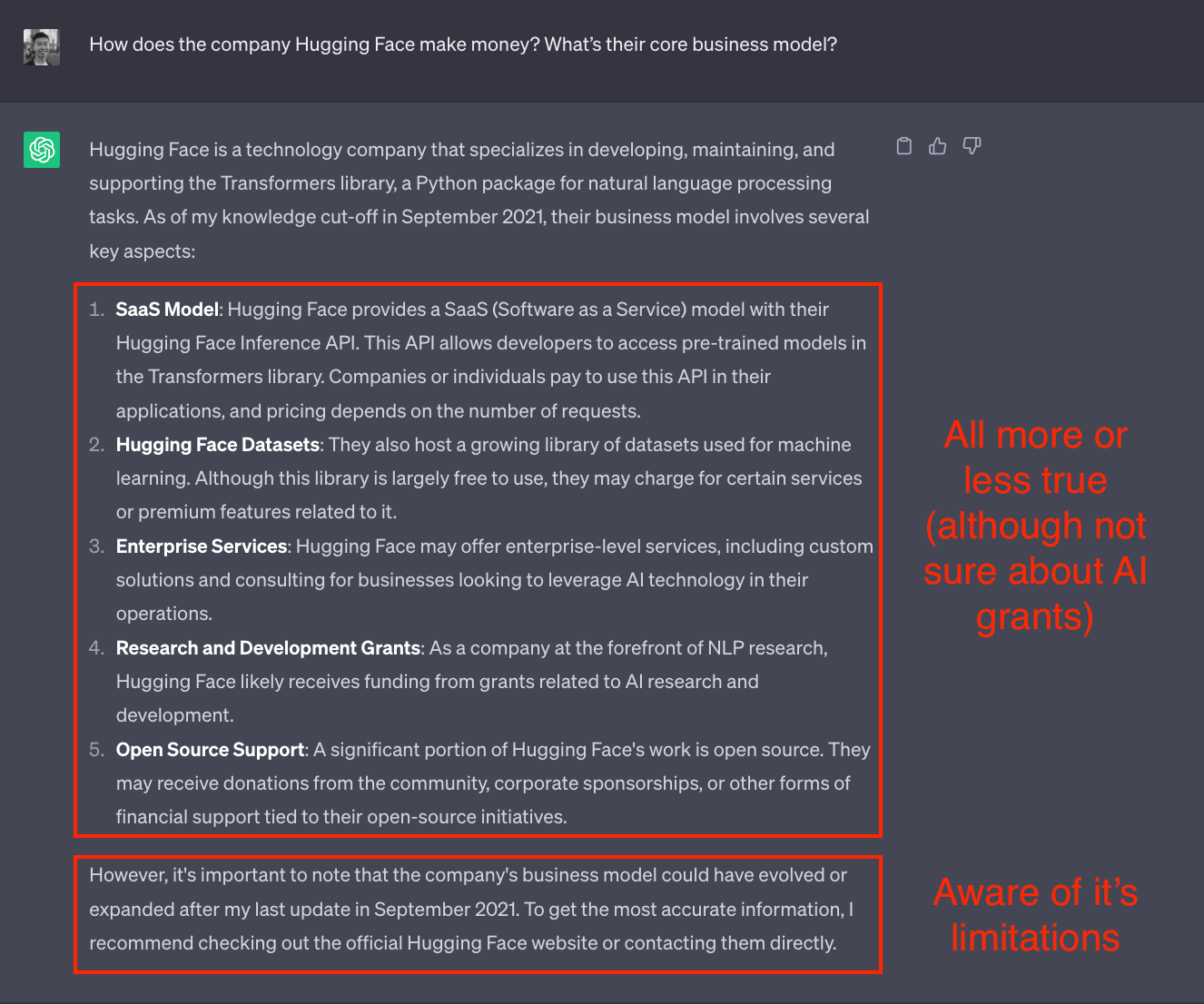

Open source vs closed source is worth a discussion here, since how this dynamic shakes out will affect the strategies and landscape of the entire foundation model layer. The range of arguments lives on a spectrum from very-bullish open-source (“OpenAI has no moat, open-source will catch up in no time”) to very-bullish proprietary (“OpenAI will always be better and cheaper, there’s no point in trying to catch up”). As with most cases, the truth will probably turn out to be somewhere in the middle.

Let’s explore these three points on the spectrum.

Proprietary has no moat. This position is probably best exemplified by this leaked internal memo at Google that made the rounds when first released. Without first going into academic explanations of why this is wrong, let me just illustrate with a couple of examples comparing ChatGPT (GPT-4) against chatbots built on some of the best open-source models around.

I asked ChatGPT and the new Falcon-40B-Instruct a small brain teaser:

Another example comparing ChatGPT with oasst-sft-6-llama-30b, which powers HuggingChat:

Yes, this is not scientific at all, and it only covers chat scenarios on specific topics without diving into NLP benchmarks and so on, but the difference in user experience is undeniable. Some of the main challenges with LLMs are reasoning and hallucinations, and proprietary models tend to blow open-source out of the water here. Not to mention that lazy imitations of proprietary models are mostly folly.

So why is ChatGPT so good? Turns out talent concentration, data, and compute still matter a lot to model development.

Talent concentration: I’m lumping together both the sheer number of talented researchers an organization has and the engineering + research know-how that accumulates within the org, especially as the model builders pull back from releasing papers on their work (thereby depriving the public of potentially breakthrough advances in architecture, training techniques, etc.).

Data: Lots of data, especially lots of good data, really matters. There are dozens of papers that verify this conclusion. The problem is, most public sources are already exhausted, with the entire internet basically table-stakes for LLM training data. How do you get more data then? Either have existing troves of proprietary data (e.g. Bloomberg GPT), or pay a ton of humans to create net-new high-quality data. Unless large models can soon truly self-improve recursively (in which case leading AI labs are likely to get there first anyways), the capital needed to spend on meaningful amounts of human-generated data will more and more be concentrated in the hands of the proprietary model builders.

Compute: You need astronomical amounts of computing power to train and run (inference) the largest models. Anthropic plans to raise $3 - $5 billion in capital in the next two years to stay competitive on model research. Not all of this will be strictly spent on model training and maintenance of course, but I suspect there are exponentially increasing costs to training stronger and stronger models in the future. Even OpenAI with its virtually unlimited $13B in funding (largely compute credits) from Microsoft struggles with hardware constraints right now. Players with high amounts of capital will hog as many computing resources as they can in the future, creating economies of scale, and furthering the divide between decentralized open-source players and concentrated proprietary ones.

Open-source is screwed. This is not really a fair position either. Large foundation models are very powerful out-of-the-box, but still lose to small, fine-tuned models on the niche tasks those models are specifically trained to do. A lot of interesting fine-tuning is still predominantly done on open-source models, since the smaller sizes and direct model access make them much more “hackable” than any of the tightly-controlled proprietary models. Customizability will always draw the academic and developer worlds toward open-source.

Not all use cases require the best model either, just like buying a PC doesn’t require you to max out the specs if you’re not trying to run games in 4K. In those cases, using a slightly weaker open-source model will likely be cheaper and more flexible to maintain than a top-of-the-line proprietary model.

Both worlds will find their niche. Just looking at the history of other open-source/closed-source tensions, there’s never been a winner-takes-all scenario. macOS and Windows (both proprietary operating systems) dominate consumer desktops, while Linux (open-source) dominates the server space. In mobile OSs, Android (open-source) takes up most of the market share, but iOS (proprietary) has never been handily beaten (which Apple’s market cap is a clear indication of). In both examples, it’s also important to note there are many complex factors beyond just the characteristic of open vs closed source that contribute to a product’s dominance.

I think this dynamic will play out in the world of AI models too. The best proprietary models will likely always have higher baseline capabilities than the best open-source ones due to an accumulation of talent, data, and compute, and will be needed for tasks that require a high degree of accuracy and reasoning[8]. However, it’s much easier to build a diverse ecosystem around open-source models, so task- and domain-specific models will likely thrive better under this system[9].

[1] This is very murky though, since you could argue OpenAI also belongs here since their main product is ChatGPT, which is definitely a consumer product. For now, I consider OpenAI more “infra” since there’s still heavy lip service to selling GPT-4 API as a product in and of itself, and not as a piece of product architecture.

[2] I’m also aware that the future of foundation models is developing towards multi-modal, and that even calling GPT-4 a text model isn’t strictly correct given its image input capabilities. However, the current state of frontier models still has some pretty visible demarcations between LLMs and diffusion models for images for example. The distinctions are pretty justified until we truly have a “do-it-all” model that can do multi-modal input and output proficiently.

[3] Academic benchmarks aside, in terms of anecdotal “vibes” on which models are good at what, I more or less agree with Ethan Mollick’s comparison of different chatbots here.

[4] The real dynamics are of course more complicated. On the upside, 100M users was a point-in-time snapshot in early 2023, so total users could be 150M by now. The pessimistic lens is that 100M was a peak, and user numbers have actually declined since the high-fever of Feb/Mar. The $240M projection also assumes no churn, which never happens with subscription products. Average annual churn rates (albeit mostly B2B) are around 10-14%, which should ideally be factored in.

[5] Stratechery has a great article on this framework and other thoughts coming out of Google I/O.

[6] One kind of fun aside is the sheer success of the original “Attention is All You Need” authors later on. Aidan Gomez went on to found Cohere, with Adept and Character AI springing from others.

[7] Technically not commercially usable due to its limited license, but regardless drove a massive amount of subsequent academic research.

[8] UNLESS some mad-lad company (cough cough Meta) decides to truly match the spending and dedication of companies like OpenAI and Google in creating competitive frontier models that are completely open-source, all in the spirit of commoditizing the space. There’s a non-zero chance this could happen, which would be an exciting world to see. In this world, OpenAI really wouldn’t have a model moat, and would need to compete on pure product experience and/or cost (i.e. economies of scale on data infra + model efficiency developments).